Many years ago, when I was just starting out in my IT career, I had the opportunity to work on the trading floor of a major oil company. I was tasked with helping the oil traders use huge, complex Excel spreadsheets to make decisions about future oil prices, decisions that could be worth millions of dollars. It was an eye-opening experience that taught me a lot about the inner workings of the financial industry.

These traders had classic high-pressure, high-reward jobs: they were constantly trying to make money in the markets and were often under immense stress. Every Friday was a big pressure-release day, when they would all go out for a long lunch (mainly delicious curries) and take a break from the markets. It was great to chat with them, as they would talk about the pressures of the job, their motivations for continuing to trade, and their strategies for making money.

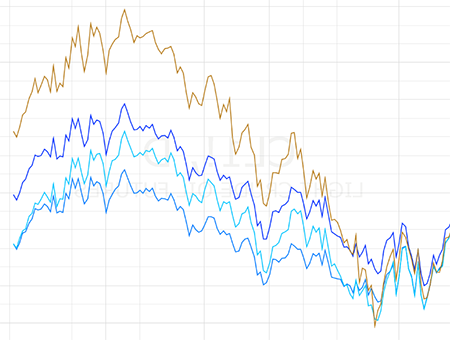

One big thing that has stuck with me from my time working with oil traders is that change is what they are looking for. In oil trading, the goal is to bet on the price of oil going up or down; traders make their money from the highs and lows of the market. The worst-case scenario for them is a stable oil price, as there is no money to be made in a market that doesn't move. The same concept applies to conversion rate optimisation (CRO). If a website never changes anything, it cannot increase its conversion rate. Change is necessary for success, and this is a lesson I have carried with me ever since.

A loss is still a gain

It is the same with CRO: we test hypotheses to measure the impact of changes. If we see a significant lift, we celebrate and announce it to the world, complete with extrapolations of the likely revenue gains. Tests that show no significant shift at least suggest that the changes are unlikely to damage conversion rates.

Some of the companies I have worked with treat a test that shows a significant loss as a learning opportunity and use it to inform the next step of their CRO program. These tests should be celebrated as much as the ones that show a lift, as they provide invaluable insight into the customer experience and can be used to identify areas for improvement. A $1 million loss is as important as a $1 million gain, as it allows teams to identify aspects of the website that are not resonating with customers and take steps to address them. The analysis, and the audience it is shared with, should be the same regardless of the outcome, as this demonstrates the value of the testing program and highlights the need to test as many proposed changes to the website as possible.
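To put a number on "significant", here is a minimal Python sketch of one common way to judge a test result: a two-sided two-proportion z-test at a 5% significance level. This method is an assumption for illustration; your testing platform may use a different approach (for example a Bayesian model or sequential testing). The function names and the traffic, conversion and order-value figures are hypothetical. The same rule classifies the result whether the variant wins or loses, and the revenue figure is a naive linear extrapolation that assumes the observed difference would hold for a full year.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test comparing control (A) and variant (B) conversion rates.

    Returns (difference in conversion rate, z statistic, two-sided p-value).
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that the change has no effect
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_b - rate_a, z, p_value


def classify_result(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Apply one significance rule, whichever direction the result goes."""
    diff, _, p_value = two_proportion_z_test(conv_a, n_a, conv_b, n_b)
    if p_value >= alpha:
        return "no significant shift"
    return "significant lift" if diff > 0 else "significant loss"


def extrapolate_annual_revenue(rate_diff, annual_visitors, average_order_value):
    """Naive projection: assumes the observed difference holds for a whole year."""
    return rate_diff * annual_visitors * average_order_value


if __name__ == "__main__":
    # Hypothetical test: 10,000 visitors per arm, 5.0% control conversion rate
    control = (500, 10_000)
    variants = {
        "loss": (420, 10_000),
        "lift": (580, 10_000),
        "flat": (510, 10_000),
    }
    for label, variant in variants.items():
        diff, z, p = two_proportion_z_test(*control, *variant)
        # Hypothetical 1,000,000 visitors a year and an average order value of 50
        revenue = extrapolate_annual_revenue(diff, 1_000_000, 50.0)
        print(f"{label}: {classify_result(*control, *variant)} "
              f"(z = {z:.2f}, p = {p:.4f}, projected annual impact = {revenue:,.0f})")
```

With these made-up figures, a 0.8-percentage-point swing is a significant lift in one direction and a significant loss in the other, and the extrapolated loss (about 400,000 a year) deserves the same write-up and the same audience as the equivalent gain.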

Even the smallest, seemingly insignificant change can have a major effect on conversion rates and overall revenue, yet such effects are hard to spot in traditional analytics reports. If a test results in a loss, investigate it to determine what caused the drop in performance. The findings will then help you form hypotheses for future tests and build a more comprehensive CRO roadmap.

Of course we love to celebrate successes, but don't put your CRO losses in the corner! It is important to analyse and learn from the tests that didn't work out as expected. Running a test lets you attribute a lift or a dip to a specific site change, so you can evaluate the performance of each change and make data-driven decisions to improve your site's performance.

If you're interested in discussing how Kraken Data can help you optimise your CRO program to maximise your return on investment, please get in touch. You can reach us by email or through our contact form. We look forward to hearing from you.

5 reasons why a negative result from a CRO test can be useful

- It tests your hypotheses about user behaviour. A negative result from an A/B test tells you that the change did not have the effect you predicted, which helps you make better-informed decisions about how to improve the user experience in the future.

- It lets you redirect your effort. Once a test has ruled an idea out, you can focus on other areas where changes are more likely to improve the user experience.

- It helps you identify potential problems. A negative result can reveal problems with the user experience, such as usability issues or design flaws introduced by the variant.

- It highlights areas for improvement. A drop in conversions shows which parts of the site customers are sensitive to, and therefore where further work is likely to matter.

- It helps to validate (or refute) your assumptions. A negative result puts your assumptions about user behaviour to the test, so your next decisions about the user experience rest on evidence rather than intuition.